Posts Tagged ‘Python’

How to Program a SunFounder PiDog

Introduction

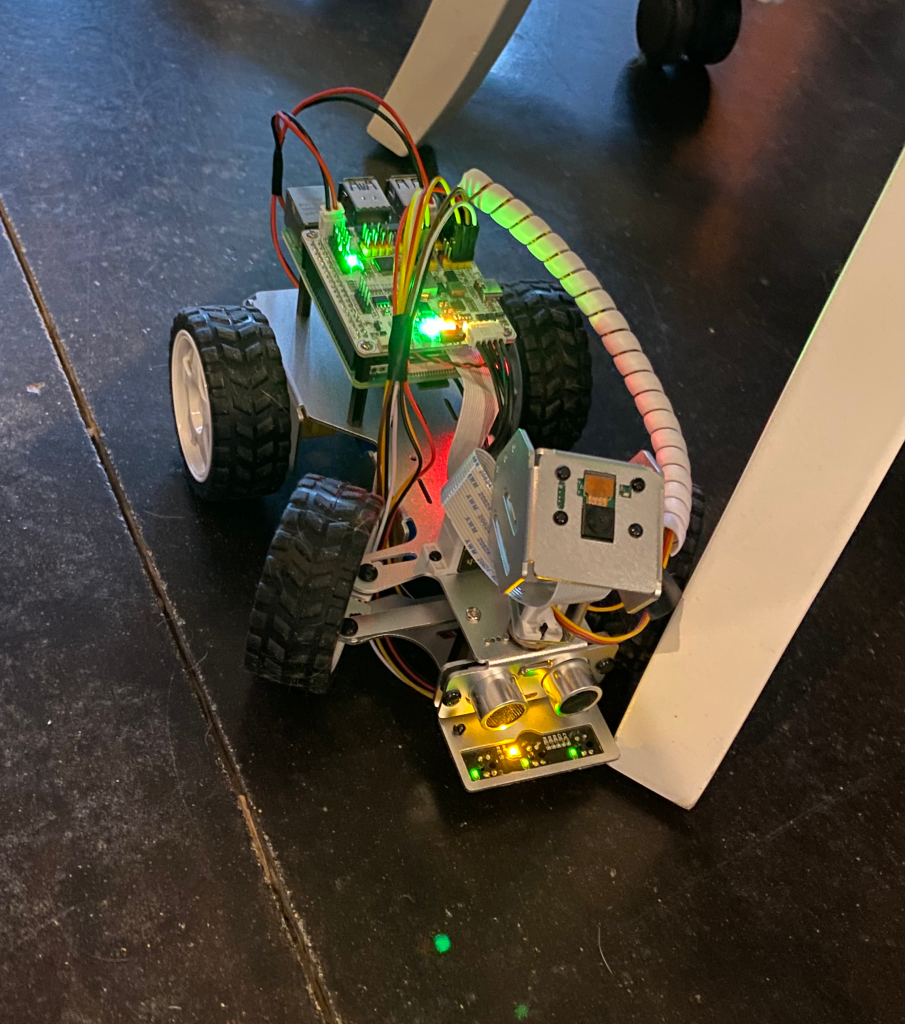

Last time, we introduced the SunFounder PiDog; this time we’ll look at how to program the PiDog to do our bidding. Previously, we looked at the SunFounder PiCar and how to program it. Both robots share the same RobotHat to interface the Raspberry Pi with the various servos, motors and devices attached to the robot. For the PiCar this is fairly simple, as you just need to turn on the motors to go and set the steering servo to the angle you want to turn. The PiDog is much more complicated. There are eight servo motors that control the legs. On each leg, one servo sets the angle of the shoulder joint and the other sets the angle of the elbow joint. Getting the PiDog to walk requires setting each of these servos in a coordinated fashion. SunFounder provides a PiDog Python class that hides most of the complexity, though it does give you direct access if you want to control the legs directly.

Introduction to the PiDog Class

To use the PiDog class, you need to instantiate a PiDog object and then start issuing commands. A common initialization sequence is:

dog = Pidog()

dog.do_action('stand', speed=80)

dog.wait_all_done()

The first line creates a PiDog object to use, then the next line calls the do_action method, which supports a long list of standard actions. In the case of ‘stand’, it will set the eight leg servo motors to put the PiDog in the standing position. This is much easier than having to set all eight servo positions ourselves. You then need to wait for this operation to complete before issuing another command, which is accomplished by calling the wait_all_done method.

The PiDog class uses multithreading, so you can issue head and leg related commands at the same time and they are performed on separate threads. The head is reasonably complex, as the neck consists of three servo motors. The tail, which is controlled by one servo motor, is handled the same way. So you could start leg, head and tail commands before calling wait_all_done. Then you are free to issue another set of commands. If you issue a new command while a previous one is executing then the library will throw an exception.

To get the PiDog to move, we use actions like the following:

dog.do_action('trot', step_count=5, speed=98)

dog.do_action('turn_right', step_count=5, speed=98)

dog.do_action('backward', step_count=5, speed=98)

There are also APIs to use the speaker, read the ultrasonic distance sensor and set the front LED strip, such as:

dog.speak("single_bark_1")

dog.ultrasonic.read_distance()

dog.rgb_strip.set_mode('breath', 'white', bps=0.5)
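These calls can be combined into a simple obstacle-aware step. The following is only a minimal sketch, not SunFounder’s code: the function name cautious_step and the min_clear threshold of 40 are assumptions made for illustration, and it uses only the methods shown above. It treats a reading of -1 as a failed measurement, which is how the sample program at the end of this article handles it.

```python
def cautious_step(dog, min_clear=40):
    """Trot forward if the path looks clear, otherwise bark and turn.

    `dog` is expected to be a pidog.Pidog instance (or any object that
    offers the same methods). `min_clear` is a hypothetical threshold in
    the sensor's units, assumed here to be centimetres.
    """
    distance = dog.ultrasonic.read_distance()
    if distance == -1 or distance >= min_clear:
        # A failed reading (-1) is treated the same as "nothing seen".
        action = 'trot'
    else:
        dog.speak("single_bark_1")
        action = 'turn_right'
    dog.do_action(action, step_count=5, speed=98)
    dog.wait_all_done()  # block until the legs have finished moving
    return action
```

On the robot this would be called after the usual initialization, for example cautious_step(dog) in a loop once the dog is standing.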

Using the camera is exactly the same as for the PiCar, via the vilib library, as this is the same camera, connected in the same manner. The sample program below uses the vilib library to take successive images and compares them to see if the PiDog is stuck, though this doesn’t work very well as the legs cause so much movement that the images are rarely the same.

Sample Program

I took the PiCar Roomba program and modified it to work with the PiDog. It doesn’t work as well as a Roomba, as the PiDog is quite a bit slower than the PiCar and the standard actions to turn the PiDog have quite a large turning radius. It might be nice if there were some standard actions to perhaps turn 180 degrees in a more efficient manner. The listing is at the end of the article.

Documentation

At the lowest level, all the source code for the Python classes, like PiDog.py, is included and is quite interesting to browse. Then there is a set of sample programs. The standard set is documented on the website, and there is a further set in a test folder that is installed but not mentioned on the website. The website also has an easy coding section that explains the various parts of the PiDog class. Although there isn’t a reference document, I found the provided docs quite good and the source code easy enough to read for the complete picture.

Summary

Although coordinating eight servo motors to control walking is quite complicated, the PiDog class allows you to still control basic operations without having to understand that complexity. If you did want to program more complicated motion, like perhaps getting the PiDog to gallop then you are free to do so. I found programming the PiDog fairly straightforward and enjoyable. I find the way the PiDog moves quite interesting and this might give you a first look at more complicated robotic programming like you might encounter with a robot arm with a fully articulated hand.

from pidog import Pidog
import time
import random
from vilib import Vilib
import os
import cv2
import numpy as np

SPIRAL = 1
BACKANDFORTH = 2
STUCK = 3

state = SPIRAL
StartTurn = 80
foundObstacle = 40
StuckDist = 10
lastPhoto = ""
currentPhoto = ""
MSE_THRESHOLD = 20

def compareImages():
    if lastPhoto == "":
        return 0
    img1 = cv2.imread(lastPhoto)
    img2 = cv2.imread(currentPhoto)
    if img1 is None or img2 is None:
        return(MSE_THRESHOLD + 1)
    img1 = cv2.cvtColor(img1, cv2.COLOR_BGR2GRAY)
    img2 = cv2.cvtColor(img2, cv2.COLOR_BGR2GRAY)
    h, w = img1.shape
    try:
        diff = cv2.subtract(img1, img2)
    except:
        return(0)
    err = np.sum(diff**2)
    mse = err/(float(h*w))
    print("comp mse = ", mse)
    return mse

def take_photo():
    global lastPhoto, currentPhoto
    _time = time.strftime('%Y-%m-%d-%H-%M-%S',time.localtime(time.time()))
    name = 'photo_%s'%_time
    username = os.getlogin()
    path = f"/home/{username}/Pictures/"
    Vilib.take_photo(name, path)
    print('photo save as %s%s.jpg'%(path,name))
    if lastPhoto != "":
        os.remove(lastPhoto)
    lastPhoto = currentPhoto
    currentPhoto = path + name + ".jpg"

def executeSpiral(dog):
    global state
    dog.do_action('turn_right', step_count=5, speed=98)
    dog.wait_all_done()
    distance = round(dog.ultrasonic.read_distance(), 2)
    print("spiral distance: ",distance)
    if distance <= foundObstacle and distance != -1:
        state = BACKANDFORTH

def executeUnskick(dog):
    global state
    print("unskick backing up")
    dog.speak("single_bark_1")
    dog.do_action('backward', step_count=5, speed=98)
    dog.wait_all_done()
    time.sleep(1.2)
    state = SPIRAL

def executeBackandForth(dog):
    global state
    distance = round(dog.ultrasonic.read_distance(), 2)
    print("back and forth distance: ",distance)
    if distance >= StartTurn or distance == -1:
        dog.do_action('trot', step_count=5, speed=98)
        dog.wait_all_done()
    elif distance < StuckDist:
        state = STUCK
    else:
        dog.do_action('turn_right', step_count=5, speed=98)
        dog.wait_all_done()
    time.sleep(0.5)

def main():
    global state
    try:
        dog = Pidog()
        dog.do_action('stand', speed=80)
        dog.wait_all_done()
        time.sleep(.5)
        dog.rgb_strip.set_mode('breath', 'white', bps=0.5)
        Vilib.camera_start(vflip=False,hflip=False)
        while True:
            take_photo()
            if state == SPIRAL:
                executeSpiral(dog)
            elif state == BACKANDFORTH:
                executeBackandForth(dog)
            elif state == STUCK:
                executeUnskick(dog)
            if compareImages() < MSE_THRESHOLD:
                state = STUCK
    finally:
        dog.close()

if __name__ == "__main__":
    main()

Adding Vision to the SunFounder PiCar-X

Introduction

Last time, we programmed a SunFounder PiCar-X to behave similarly to a Roomba, basically moving around a room following an algorithm to go all over the place. This was a first step and had a few limitations. The main one is that it could easily get stuck, since if there is nothing in front of the ultrasonic sensor, then it doesn’t detect it is stuck. This blog post adds some basic image processing to the algorithm, so if two consecutive images from the camera are the same, then it considers itself stuck and will try to extricate itself.

The complete program listing is at the end of this posting.

Using Vilib

Vilib is a Python library provided by SunFounder that wraps a collection of lower level libraries, making it easier to program the PiCar-X. This library includes routines to control the camera, along with a number of AI algorithms to detect objects, recognize faces and recognize gestures. These are implemented as TensorFlow Lite models. In our case, we’ll use Vilib to take pictures, then we’ll use OpenCV, the open source computer vision library, to compare the images.

To use the camera, you need to import the Vilib library, start the camera and then you can take pictures or video.

from vilib import Vilib

Vilib.camera_start(vflip=False,hflip=False)

Vilib.take_photo(name, path)

Most of the code is to build the name and path to save the file. The code uses the strftime routine to add the time to the file name. The resolution of this routine is seconds, so you have to be careful not to take pictures less than a second apart or the simple algorithm will get confused.
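One possible way to sidestep the one-second resolution is to append a running counter to the timestamp. This is only a sketch and not part of the program in this post: the helper name unique_photo_name and the counter suffix are assumptions for illustration.

```python
import itertools
import time

# Module-level counter so every call in one run gets a fresh suffix.
_counter = itertools.count()

def unique_photo_name(prefix="photo"):
    """Return a name such as photo_2024-01-31-12-00-00_0 that does not
    repeat within one run, even when called twice in the same second."""
    stamp = time.strftime('%Y-%m-%d-%H-%M-%S', time.localtime())
    return f"{prefix}_{stamp}_{next(_counter)}"
```

The returned string could then be passed as the name argument to Vilib.take_photo in place of the timestamp-only name.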

Using OpenCV

To compare two consecutive images, we use some code from this Tutorialspoint article by Shahid Akhtar Khan. Since the motor is running, the PiCar-X is vibrating and bouncing a bit, so the images won’t be exactly the same. This algorithm loads the two most recent images and converts them to grayscale. It then subtracts the two images; if they are exactly the same then the result will be all zeros. However, this will never be exactly the case. Instead, we calculate the mean squared error (MSE) and compare it to an MSE_THRESHOLD value; from experimentation, a value of 20 seemed to work well. OpenCV doesn’t provide a routine to calculate the MSE, so we use NumPy directly to do this.
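To make the calculation concrete, here is a plain-Python sketch of the mean squared error between two grayscale images, written for small nested lists rather than OpenCV arrays. The function name mean_squared_error is an assumption for illustration. One caveat worth knowing: cv2.subtract saturates at zero on 8-bit images and squaring an 8-bit NumPy array wraps around at 256, so the numbers printed by the program in this post are not identical to this textbook formula, and a threshold tuned for one would need retuning for the other.

```python
def mean_squared_error(img_a, img_b):
    """MSE between two grayscale images given as equal-sized 2D
    sequences of pixel intensities. Returns None if the shapes differ."""
    if len(img_a) != len(img_b) or any(
            len(row_a) != len(row_b) for row_a, row_b in zip(img_a, img_b)):
        return None
    total = 0
    count = 0
    for row_a, row_b in zip(img_a, img_b):
        for a, b in zip(row_a, row_b):
            d = int(a) - int(b)  # plain ints, so no 8-bit wrap-around
            total += d * d
            count += 1
    return total / count if count else 0.0
```

Identical images give 0.0, and the value grows with the squared pixel differences, which is why a small threshold can stand in for "the pictures are the same".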

Error Handling

Last week’s version of this program didn’t have any error handling. One problem was that reading the ultrasonic sensor failed now and again, returning -1, which would trigger the car to assume it was stuck and back up unnecessarily. The program now checks for -1. Similarly, taking a picture with the camera doesn’t always work, so it needs to check if the returned image is None. Strangely, every now and then the size of the picture returned is different, causing the subtract call to fail; this is handled by putting it in a try/except block to catch the error. Error handling is important: when dealing with hardware devices, errors do happen and need to be handled.
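An alternative to checking for -1 at every call site is to retry the reading a few times. The helper below is a hedged sketch rather than what the program in this post does: the name read_valid_distance and the default of three attempts are assumptions. It takes the read function as a parameter so it can be tried without the hardware.

```python
def read_valid_distance(read_fn, attempts=3, invalid=-1):
    """Call read_fn up to `attempts` times and return the first reading
    that is not the `invalid` marker; return `invalid` if all fail."""
    for _ in range(attempts):
        distance = read_fn()
        if distance != invalid:
            return distance
    return invalid
```

On the car this might be called as read_valid_distance(px.ultrasonic.read); the caller still has to decide what to do if every attempt fails.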

Operation

I left the checks for getting stuck via the ultrasonic sensor in place. In the main loop in the main routine, the program takes a picture at the top, executes a state and then compares the pictures at the end. This seems to work fairly well. It sometimes takes a bit of time for the car to get sufficiently stuck that the pictures are close enough to count as the same. For instance when a wheel catches a chair leg, it will swing around a bit, until it gets stuck good and then the pictures will be the same and it can back out. The car now seems to go further and gets really stuck in fewer places, so an improvement, though not perfect.

Summary

Playing with programming the PiCar-X is fun; most things work pretty easily. I find I do most coding with the wheels lifted off the ground, connected to a monitor and keyboard, so I can debug in Thonny. Using the Vilib Python library makes life easy, plus you have the source code, so you can use it as an example of using the associated libraries like picamera and OpenCV.

from picarx import Picarx
import time
import random
from vilib import Vilib
import os
import cv2
import numpy as np

POWER = 20
SPIRAL = 1
BACKANDFORTH = 2
STUCK = 3

state = SPIRAL
StartTurn = 80
foundObstacle = 40
StuckDist = 10
spiralAngle = 40
lastPhoto = ""
currentPhoto = ""
MSE_THRESHOLD = 20

def compareImages():
    if lastPhoto == "":
        return 0
    img1 = cv2.imread(lastPhoto)
    img2 = cv2.imread(currentPhoto)
    if img1 is None or img2 is None:
        return(MSE_THRESHOLD + 1)
    img1 = cv2.cvtColor(img1, cv2.COLOR_BGR2GRAY)
    img2 = cv2.cvtColor(img2, cv2.COLOR_BGR2GRAY)
    h, w = img1.shape
    try:
        diff = cv2.subtract(img1, img2)
    except:
        return(0)
    err = np.sum(diff**2)
    mse = err/(float(h*w))
    print("comp mse = ", mse)
    return mse

def take_photo():
    global lastPhoto, currentPhoto
    _time = time.strftime('%Y-%m-%d-%H-%M-%S',time.localtime(time.time()))
    name = 'photo_%s'%_time
    username = os.getlogin()
    path = f"/home/{username}/Pictures/"
    Vilib.take_photo(name, path)
    print('photo save as %s%s.jpg'%(path,name))
    if lastPhoto != "":
        os.remove(lastPhoto)
    lastPhoto = currentPhoto
    currentPhoto = path + name + ".jpg"

def executeSpiral(px):
    global state, spiralAngle
    px.set_dir_servo_angle(spiralAngle)
    px.forward(POWER)
    time.sleep(1.2)
    spiralAngle = spiralAngle - 5
    if spiralAngle < 5:
        spiralAngle = 40
    distance = round(px.ultrasonic.read(), 2)
    print("spiral distance: ",distance)
    if distance <= foundObstacle and distance != -1:
        state = BACKANDFORTH

def executeUnskick(px):
    global state
    print("unskick backing up")
    px.set_dir_servo_angle(random.randint(-50, 50))
    px.backward(POWER)
    time.sleep(1.2)
    state = SPIRAL

def executeBackandForth(px):
    global state
    distance = round(px.ultrasonic.read(), 2)
    print("back and forth distance: ",distance)
    if distance >= StartTurn or distance == -1:
        px.set_dir_servo_angle(0)
        px.forward(POWER)
        time.sleep(1.2)
    elif distance < StuckDist:
        state = STUCK
        time.sleep(1.2)
    else:
        px.set_dir_servo_angle(40)
        px.forward(POWER)
        time.sleep(5)
    time.sleep(0.5)

def main():
    global state
    try:
        px = Picarx()
        px.set_cam_tilt_angle(-90)
        Vilib.camera_start(vflip=False,hflip=False)
        while True:
            take_photo()
            if state == SPIRAL:
                executeSpiral(px)
            elif state == BACKANDFORTH:
                executeBackandForth(px)
            elif state == STUCK:
                executeUnskick(px)
            if compareImages() < MSE_THRESHOLD:
                state = STUCK
    finally:
        px.forward(0)

if __name__ == "__main__":
    main()

Programming the SunFounder PiCar-X to be a Roomba

Introduction

Last time, I introduced SunFounder’s PiCar-X, a robot car controlled by a Raspberry Pi. The previous article covered assembly and initial setup. In this article, we’ll look at writing a simple Python program for the PiCar-X, namely one that simulates a Roomba.

Learning the Object Model

There doesn’t seem to be a User Guide or Reference Manual for programming the PiCar-X; instead there are quite a few examples and a number of YouTube tutorials. The libraries provided by SunFounder are all written in Python and the full source code is provided. The main Picarx object is built on top of a Robot_Hat library. The Picarx library is high level and easy to follow, whereas the lower level Robot_Hat library translates the desired actions into commands to directly control the hardware.

If you want a reference manual, you can generate one with pydoc by running Python with the -m pydoc option, such as:

python3 -m pydoc picarx

python3 -m pydoc robot_hat
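The same text can also be produced from inside Python with the standard pydoc module, which can be handy in Thonny. In this sketch the json module is only a stand-in so the snippet runs on any machine; on the Pi you would pass 'picarx' or 'robot_hat' instead.

```python
import pydoc

# 'json' is a stand-in module name; replace it with 'picarx' or
# 'robot_hat' on a Pi where those libraries are installed.
text = pydoc.render_doc('json', renderer=pydoc.plaintext)

# Show just the beginning of the generated reference text.
print(text[:300])
```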

In the programs below, we create a Picarx object and then use that to control the car:

px = Picarx() # Create a Picarx object called px

px.forward(speed) # Go forward at the set speed

px.backward(speed) # Go backwards at speed

px.set_dir_servo_angle(angle) # Steer to specific angle

px.ultrasonic.read() # Read the ultrasonic sensor for distance

As you can see, a programmer only needs to know a handful of commands to control the PiCar-X. It’s worth browsing $HOME/picar-x/picarx/picarx.py to see all the methods available to you.

Stopping the Car

All the examples, as well as my program shown later, contain a finally clause to stop the motor when the program finishes. If you start the motor running, then it will keep on running forever until told to stop, whether your program is still running or not. When you are debugging the program, sometimes the program will crash or you stop it in the debugger. It is annoying to have the car still going when the program is stopped, especially if you have the car tethered to a monitor and keyboard.

To help with this, I created a stopcar.py program that I can have loaded in a Thonny window and run whenever I need the motor stopped.

from picarx import Picarx

def main():
    try:
        px = Picarx()
        px.forward(0)
    finally:
        px.forward(0)

if __name__ == "__main__":
    main()

I found this to be a useful program that I use frequently. It also shows about the simplest PiCar-X program possible, importing the picarx library, creating an object and then setting the forward speed to zero.
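The finally clause idea can also be packaged as a context manager, so every program gets the stop-on-exit behaviour with a single with statement. This is a sketch under assumptions, not part of the SunFounder library: the name motors_stopped_on_exit is hypothetical, and it relies only on px.forward(0) stopping the motors as described above.

```python
from contextlib import contextmanager

@contextmanager
def motors_stopped_on_exit(px):
    """Yield px, then set the forward speed to zero no matter how the
    block ends (normal exit or an exception)."""
    try:
        yield px
    finally:
        px.forward(0)

# Intended use on the car (requires the picarx library):
#
#   with motors_stopped_on_exit(Picarx()) as px:
#       px.forward(20)
#       time.sleep(1)
```

This does not help if the program is killed outright in the debugger, which is where a separate stopcar.py is still useful.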

If the PiCar-X was a Roomba

If I want my PiCar to play with the dogs, one approach is to have it automatically drive all over the place and somehow extract itself whenever it gets stuck. I wondered how it would be if I programmed it to behave like a Roomba vacuum cleaner. The Roomba tries to cover all the floor space in an area. Roombas have added sophistication to their algorithms over the years, however the original algorithm was fairly simple. Basically the Roomba would spiral outwards until it hit something, which it would assume was a wall, and then start doing a lawn mowing type sweep, starting by going along the wall it found. This works great in rectangular rooms with no obstacles, but that doesn’t represent the reality of any home. In practice the Roomba needs a way to extract itself when stuck and to then resume going when it is unstuck.

The Roomba algorithm isn’t precise. There are computer science algorithms, like Dijkstra’s algorithm, that can be used, but these tend to be inefficient as the robot will return to the starting point whenever stuck, and so will tend to run down the battery with all the backtracking before it can finish exploring the room. Also, devices like the Roomba and PiCar-X don’t have an accurate positioning system, so they easily get disoriented when skidding or being bumped, making it hard to build an accurate map of the space.

Anyway, here is my first attempt, where I create a simple state machine to control the PiCar. There are three states:

- In spiral mode, spiraling outward until an obstacle is encountered.

- Back and forth mode, going back and forth until stuck.

- Stuck mode, where the PiCar tries backing up and randomly turning to unstick itself.
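The transitions between these three states can be sketched as a pure function of the current state and the ultrasonic reading, which makes them easy to try without the car. This is an illustrative restatement, not the program itself: the State enum and next_state are hypothetical names, and the two thresholds are the values used in this article’s program, with the units assumed to be centimetres.

```python
from enum import Enum

class State(Enum):
    SPIRAL = 1
    BACK_AND_FORTH = 2
    STUCK = 3

# Thresholds as used in the program; units assumed to be centimetres.
FOUND_OBSTACLE = 40
STUCK_DIST = 10

def next_state(state, distance):
    """Return the state to run next, given the latest distance reading."""
    if state is State.SPIRAL:
        # Keep spiralling until something is close enough to be a wall.
        if distance <= FOUND_OBSTACLE:
            return State.BACK_AND_FORTH
        return State.SPIRAL
    if state is State.BACK_AND_FORTH:
        # Very close readings mean we have run into something.
        if distance < STUCK_DIST:
            return State.STUCK
        return State.BACK_AND_FORTH
    # After backing up in STUCK, start spiralling again.
    return State.SPIRAL
```

Driving the motors is left out on purpose; here each state function would still issue its own steering and speed commands.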

The program is fairly simple:

from picarx import Picarx
import time
import random

POWER = 20
SPIRAL = 1
BACKANDFORTH = 2
STUCK = 3

state = SPIRAL
StartTurn = 80
foundObstacle = 40
StuckDist = 10
spiralAngle = 40

def executeSpiral(px):
    global state, spiralAngle
    px.set_dir_servo_angle(spiralAngle)
    px.forward(POWER)
    time.sleep(0.5)
    spiralAngle = spiralAngle - 5
    if spiralAngle < 5:
        spiralAngle = 40
    distance = round(px.ultrasonic.read(), 2)
    print("spiral distance: ",distance)
    if distance <= foundObstacle:
        state = BACKANDFORTH

def executeUnskick(px):
    global state
    print("unskick backing up")
    px.set_dir_servo_angle(random.randint(-50, 50))
    px.backward(POWER)
    time.sleep(0.5)
    state = SPIRAL

def executeBackandForth(px):
    global state
    distance = round(px.ultrasonic.read(), 2)
    print("back and forth distance: ",distance)
    if distance >= StartTurn:
        px.set_dir_servo_angle(0)
        px.forward(POWER)
        time.sleep(1)
    elif distance < StuckDist:
        state = STUCK
    else:
        px.set_dir_servo_angle(40)
        px.forward(POWER)
        time.sleep(5)
    time.sleep(0.5)

def main():
    global state
    try:
        px = Picarx()
        while True:
            if state == SPIRAL:
                executeSpiral(px)
            elif state == BACKANDFORTH:
                executeBackandForth(px)
            elif state == STUCK:
                executeUnskick(px)
    finally:
        px.forward(0)

if __name__ == "__main__":
    main()

A video of the car in action: https://youtube.com/shorts/RDfo_hKAG8Y?feature=share.

This actually works fairly well, though not entirely like a Roomba. One difference between a real Roomba and a PiCar-X is that the Roomba’s turning radius is quite small, whereas the PiCar-X has quite a large turning radius. Further, the PiCar-X tends to skid on my smooth polished cement floors enlarging the turning radius even more. To make a U-turn is a bit subjective, but I found turning for five seconds was about right. A more precise way of executing a 180 degree turn would be nice.

The routine to unstick the car by randomly turning and backing up works fairly well; as long as the car detects it’s stuck, it seems to be able to clear the obstacle. However, the car can get stuck if there is nothing in front of the ultrasonic sensor, for instance if the front wheel gets caught on something like a chair leg but there is nothing in front of the sensor, such as in the picture below.

Improvements

To avoid the problem of the PiCar-X getting stuck, but not being able to detect that from the ultrasonic sensor, a cool way around this would be to use the camera. Then compare camera images and if they don’t change, assume we are stuck and try to unstick ourselves. Even when stuck, the wheels are often spinning and the car is vibrating. This will cause images to differ, but we could train an AI model to decide if the car is still moving or now stuck.

Similarly we could devise an AI model to build a map of the space. If the camera gets a number of pictures of the room, could an AI model use these and output a map of the room? Or if given a map of the room, could an AI model determine a better algorithm to traverse the room in an optimal manner?

Anyway, things to consider for future projects.

Summary

The Python programming model for the PiCar-X is fairly easy to use and figure out. Getting this program up and running didn’t take long and given the simplicity of the program worked quite well. This program only used one of the PiCar-X’s sensors and didn’t use any machine learning. The next step would be to develop more sophisticated algorithms and use all the sensors to help with the navigation problem.

My Raspberry Pi Learns to Drive

Introduction

I received an early XMas present of a SunFounder PiCar-X. This is a car that is controlled via a Raspberry Pi; it contains a number of sensors, including a pan-tilt camera, ultrasonic module and a line tracking module, so you can experiment with self-driving programs. It is capable of avoiding obstacles, following objects, tracking lines, and recognizing objects. You can program it using either Python or EzBlocks (a Scratch-like language). It costs under $100 and includes a battery that powers the whole thing, including the on-board Pi. This article is an overview of the assembly and setup process.

Which Pi to Use?

Since I received my Raspberry Pi 5, I figured that I’d use my 8Gig Raspberry Pi 4 for the robot car. However this Pi 4 has an active cooler attached, so won’t fit. So I switched this with the 4Gig Pi 4 I’ve been using to track aircraft. I still had to pry off one heat sink to make it fit under the robot controller hat.

The PiCar-X supports the Raspberry Pi 4B, 3B, 3B+, and 2B. Note that there isn’t support for the Pi 5 yet. Also for all the software to work, you have to use the older Raspberry Pi OS Bullseye. The claim is that some Python components don’t work with Bookworm yet.

Assembly

When you open the box, there is a four page spread with the assembly instructions. I followed these instructions and was able to assemble the car fairly easily. There were a couple of hard to tighten screws to hold one of the stepper motors in place inside an aluminum holder, but as these things go it wasn’t bad and didn’t take too long. One nice thing is that the kit includes lots of extra screws, ratchets and washers. Anywhere where four screws are required, the kit provides six. So I never ran out of screws and have lots of extras for the future. It also included screwdrivers, wrenches and electrical tape.

All assembled, but now what? The instructions showed how to assemble it, but not what to do next. So I went to the web and found the SunFounder docs online here. I followed along installing the right version of Raspberry Pi OS to an SD card and then booting up the Raspberry Pi. I connected the Pi to a monitor, keyboard and mouse to do the initial setup, though doing this via secure remote signon would have been fine as well. Installing the various Python components and libraries was easy enough. Might be nice if they provided a script to do all the steps for you, rather than a lot of copy/paste of various Linux commands.

Turning On?

Next up, turn the robot on and play with the sample Python programs. Ok, how do you turn the robot on? Supposedly there is a power switch on the robot hat, and yes, there is one, but in a different place than the documentation shows. Turning it on did nothing. Hmmm. Fortunately, I thought to try plugging the Raspberry Pi’s power adapter into the USB-C port on the robot hat rather than the USB-C port on the Pi. When I did this the board came to life. Apparently the battery it came with was completely dead and it is charged via the USB-C port on the robot hat. Reviewing the documentation, this is mentioned nowhere. Generally when using the PiCar-X, you may as well always use the robot hat’s USB-C port rather than the Pi’s. I think they should mention this. The robot hat’s USB-C port isn’t even mentioned in the documentation.

Calibration?

Once I had it powered up, I could try the sample Python programs. The first ones they want you to run are meant to calibrate the stepper motors. Ok, fair enough, however the diagrams show doing this before assembling the robot? Funny this has not been mentioned anywhere until now. Anyway I did the best I could with the robot already assembled and it seems to work fine. I don’t know how important this step is, but if it is important and should be done during assembly, then it should be highlighted in the assembly instructions.

Running

Next there are some simple Python programs where you press a key to move forward, turn right, etc. At this point you want to untether the robot from any monitor, keyboard, mouse and external power. Put it on the floor and experiment a bit. Once untethered you need to use ssh to remotely sign in to the onboard Pi and run the various Python samples that way. Fiddly and rather primitive way to control the robot, but these samples show the basic building block you can use in your own programs to control the car. These are nice and simple programs, so it’s easy to follow how they work.

Phone App

There is an iOS/Android app to control the car from your phone/tablet. You have to have a specific program running on the Pi to receive and execute commands from the phone.

The phone app is a bit of a toolbox where you select various components on the screen to control the robot. There is a tutorial that guides you through creating a working control panel for your device. Note this app can control a variety of SunFounder robot products.

Clicking on edit for a control or the plus sign if there isn’t a control lets you add something:

I didn’t follow the instructions very carefully and received Python errors when I tried to control my car. It was a simple matter to see that the Python program is quite picky that you put the correct controls in the correct places. If you want to customize the app controller, then you have to make matching changes to both the control layout in the app as well as how the Python program running on the Pi reacts to the data it receives. The different controls send data in different formats and you get Python data mismatch errors if they don’t match.

Once I set the app up correctly, then I could control the robot from the app and use it to chase my dogs around the house.

Summary

This robot is intended to allow you to play with programming a robot car and to this end it provides all the tools you need to do this. The documentation is a bit lacking in places and it appears the robot hat has been upgraded without the documentation being updated to match, but this shouldn’t stump any true DIYer. If you are looking for a remote control car to control from your phone, there are simpler, easier to use products out there. The idea here is that you have a fairly powerful computer, in this case a Raspberry Pi 4, that can run various machine learning algorithms, run complicated Python programs and allow you to write sophisticated programs to control the car. For under $100, this is a great platform to play with all these technologies.

ArduPy on the Wio Terminal

Introduction

Last week, we introduced Seeed Studio’s Wio Terminal and wrote a small program in C using the Arduino IDE. This week, we’ll replicate the same program in Python using ArduPy, Seeed’s version of MicroPython for the Wio Terminal and Seeeduino XIAO. In this article we’ll look at what’s involved in working with ArduPy along with some of its features and limitations.

What is ArduPy?

ArduPy is an open source adaptation of MicroPython that uses the Arduino API to talk to the underlying device. The goal is to make adding MicroPython support easier for hardware manufacturers, so that if you develop Arduino support for your device, then you get MicroPython support for free. There are a large number of great microcontrollers out there, and for board and system manufacturers the most time-consuming part of getting a new board to market is developing the SDK. Further, great boards run the risk of failing in the market if the software support isn’t there. Similarly, a programmer buying a new board really likes to be able to use familiar software and not have to learn a whole new SDK and development system from scratch.

When you develop an Arduino program, you include the libraries used and the program then runs on the device. In the case of ArduPy, the Python runtime is the running Arduino program, but what libraries does it contain? Seeed developed a utility, aip, to rebuild the ArduPy runtime and include additional libraries. This lets you save memory by not including a bunch of libraries you aren’t using, but still have the ability to find and include the libraries you need.

The downside of ArduPy is that currently there isn’t Python IDE integration. As a partial workaround there is REPL support (Read Evaluate Print Loop), which lets you see the output of print statements and execute statements in isolation.

You need to flash the ArduPy runtime to the device; after that the device will boot with a shared drive to which you can save a file, either boot.py, which is run every time the device boots, or main.py, which is run every time it is saved.

Our Koch Snowflake Program Again

We described the Koch Snowflake last time and implemented it in C. The following is a Python program, where I took the C program and edited the syntax into shape for Python. I left in a print statement so we can see the output in REPL. The screenshot above shows the program running.

from machine import LCD
import math

lcd = LCD() # Initialize LCD and turn on the backlight
lcd.fillScreen(lcd.color.BLACK) # Fill the LCD screen with color black
lcd.setTextSize(2) # Setting font size to 2
lcd.setTextColor(lcd.color.GREEN) # Setting text color to Green

turtleX = 0.0
turtleY = 0.0
turtleHeading = 0.0
DEG2RAD = 0.0174532925
level = 3
size = 200
turtleX = 320/8
turtleY = 240/4

def KochSnowflakeSide(level, size):
    print("KochSnowFlakeSide " + str(level) + " " + str(size))
    if level == 0:
        forward( size )
    else:
        KochSnowflakeSide( level-1, size / 3 )
        turn( 60 )
        KochSnowflakeSide( level-1, size / 3)
        turn( -120 )
        KochSnowflakeSide( level-1, size / 3)
        turn(60)
        KochSnowflakeSide( level-1, size / 3)

def forward(amount):
    global turtleX, turtleY
    newX = turtleX + math.cos(turtleHeading * DEG2RAD) * amount
    newY = turtleY + math.sin(turtleHeading * DEG2RAD) * amount
    lcd.drawLine(int(turtleX), int(turtleY), int(newX), int(newY), lcd.color.WHITE)
    turtleX = newX
    turtleY = newY

def turn(degrees):
    global turtleHeading
    turtleHeading += degrees

turn( 60 )
KochSnowflakeSide( level , size)
turn( -120 )
KochSnowflakeSide( level, size)
turn( -120 )
KochSnowflakeSide( level, size)
turn( 180 )

Developing and Debugging

Seeed’s instructions are good on how to set up a Wio Terminal for ArduPy, but die out a bit on how to actually develop programs for it. Fortunately, they have a good set of video tutorials that are necessary to watch. I didn’t see the tutorials until after I got my program working, and they would have saved me a fair bit of time.

I started by developing my program in a text editor and then saving it as main.py on the Wio. The program did nothing. I copied the program to Thonny, a Python IDE, which reported the most blatant syntax errors that I fixed. I started debugging by outputting strings to the Wio screen, which showed me how far the program ran before hitting an error. Repeating this, I got the program working. Then I found the video tutorials.

The key is to use REPL, which is accessed via a serial port simulated on the USB connection to your host computer. The tutorial recommended using putty, which I did from my Raspberry Pi. With this you can see the output from print statements and you can execute Python commands. Below is a screenshot of running the program with the included print statement.

I tried copying and pasting the entire Python program into putty/REPL, but copy/paste doesn’t work well in putty and it messes up all the indentation, which is crucial for any Python program. When I write my next ArduPy program, I’m going to find a better terminal program than putty, one where cut/paste works properly.

Using putty/REPL isn’t as good as debugging in a proper Python IDE, but I found I was able to get my work done, and after all we are programming a microcontroller here, not a full featured laptop.
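Since a program saved as main.py can fail silently on the Wio, one option is to shake out the logic on a desktop Python first. The following is only a sketch of that idea and not something taken from Seeed’s tutorials: FakeLCD is a hypothetical stand-in that just records drawLine calls, and the Turtle class and function names are my own, chosen so the snowflake logic can run without the hardware.

```python
import math


class FakeLCD:
    """Hypothetical stand-in for the ArduPy LCD object; it only records lines."""

    def __init__(self):
        self.lines = []

    def drawLine(self, x0, y0, x1, y1, color):
        self.lines.append((x0, y0, x1, y1))


class Turtle:
    """Holds the turtle position and heading instead of using globals."""

    def __init__(self, lcd, x, y):
        self.lcd = lcd
        self.x = x
        self.y = y
        self.heading = 0.0  # degrees

    def forward(self, amount):
        new_x = self.x + math.cos(math.radians(self.heading)) * amount
        new_y = self.y + math.sin(math.radians(self.heading)) * amount
        self.lcd.drawLine(int(self.x), int(self.y), int(new_x), int(new_y), None)
        self.x, self.y = new_x, new_y

    def turn(self, degrees):
        self.heading += degrees


def koch_side(turtle, level, size):
    """Draw one side of the snowflake, recursing until level reaches 0."""
    if level == 0:
        turtle.forward(size)
        return
    for angle in (60, -120, 60):
        koch_side(turtle, level - 1, size / 3)
        turtle.turn(angle)
    koch_side(turtle, level - 1, size / 3)


def snowflake(turtle, level, size):
    """Draw the three sides, using the same turns as the program above."""
    turtle.turn(60)
    koch_side(turtle, level, size)
    turtle.turn(-120)
    koch_side(turtle, level, size)
    turtle.turn(-120)
    koch_side(turtle, level, size)
    turtle.turn(180)


lcd = FakeLCD()
turtle = Turtle(lcd, 320 / 8, 240 / 4)
snowflake(turtle, 3, 200)
print(len(lcd.lines), "segments drawn")
```

A level 3 snowflake has 3 × 4³ = 192 segments and the turtle should finish back where it started, so both make cheap sanity checks before copying the real program to the device.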

Summary

ArduPy is an interesting take on MicroPython. The library support for the Wio Terminal is good and it does seem to work. Being able to use an IDE would be nice, but you can get by with REPL. Most people find learning Python easier than learning C, and I think this is a good fit for anyone approaching microcontrollers without any prior programming experience.

Playing with Julia 1.0 on the Raspberry Pi

Introduction

A couple of weeks ago I saw the press release about the release of version 1.0 of the Julia programming language and thought I’d check it out. I saw it was available for the Raspberry Pi, so I booted up my Pi and installed it. Julia has been publicly available since 2012; it was created by Jeff Bezanson, Stefan Karpinski, Viral Shah and Alan Edelman, with roots at MIT, as an open source project for mathematical computing.

Why Julia?

Most people doing data science and numerical computing use the Python or R languages. Both of these are open source languages with huge followings. All new machine learning projects need to integrate with these to get anywhere. Both are very productive environments, so why do we need a new one? The main complaint about Python and R is that these are interpreted languages and as a result are very slow when compared to compiled languages like C. They both get around this by supporting large libraries of optimized code written in C, C++, Assembler and Fortran to give highly optimized off the shelf algorithms. These work great, but if none of these applies and you need to write Python loops to process a large data set then it can get really frustrating. Another frustration with Python is that it doesn’t have a built-in array data type and relies on the numpy and pandas libraries. Between these you can do a lot, but there are holes and strange differences between the two systems.
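To make the interpreted loop complaint concrete, here is a small sketch using only the standard library. It times a hand-written Python loop against the built-in sum, which is implemented in C. The function name loop_sum and the data size are just illustrative choices, and the actual timings will vary from machine to machine.

```python
import timeit


def loop_sum(values):
    """Add the values one at a time in interpreted Python code."""
    total = 0
    for v in values:
        total += v
    return total


data = list(range(200000))

# Run each version a few times and report the total elapsed time.
loop_time = timeit.timeit(lambda: loop_sum(data), number=5)
builtin_time = timeit.timeit(lambda: sum(data), number=5)

print("python loop:  %.3f s" % loop_time)
print("built-in sum: %.3f s" % builtin_time)
```

Both versions compute the same result; the only difference is whether the loop itself runs in the interpreter or inside compiled code.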

Julia has a powerful builtin array type and most of the array manipulation features of numpy and pandas are built in to the core language. Further Julia was created from scratch around powerful new just in time (JIT) compiler technology to provide both the speed of development of an interpreted language combined with the speed of a compiled language. You don’t get the full speed of C, but it’s close and a lot better than Python.

The Julia language borrows a lot of features from Python and I find programming in it quite similar. There are tuples, sets, dictionaries and comprehensions. Functions can return multiple values. For loops work very similarly to Python with ranges (using the : built into the language rather than the range() function).

Julia can call C functions directly (meaning you can get pointers to objects), and this allows many wrapper objects to have been created for other systems such as TensorFlow. This is why Julia is very precise about the physical representation of data types and the ability to get a pointer to any data.

Julia uses the end keyword to terminate blocks of code, rather than Python’s forced indentation or C’s curly braces. You can use semicolons to have multiple statements on one line, but don’t need them at the end of a line unless you want to suppress the printed result in the REPL.

Julia has native built-in support for most numeric data types including complex numbers and rational numbers. It has types for all the common hardware supported ints and floats. It also has arbitrary precision types built around GNU’s bignum library.

There are currently 1906 registered Julia packages and you can see the emphasis on scientific computing, along with machine learning and data science.

The creators of Julia always keep performance top of mind. As a result the parallelization support is exceptional, along with the ability to run Julia code on NVidia CUDA graphics cards and to easily set up clusters.

Is Julia Ready for Prime Time?

As of the time of this writing, the core Julia 1.0 language has been released and looks quite good. Many companies have produced impressive working systems with the 0.x versions of Julia. However right now there are a few problems.

- Although Julia 1.0 has been released, most of the add-on packages haven’t been upgraded to this version yet. In this first release you need to load the Pkg package before you can add other packages, which seems designed to discourage people from using them yet. For instance the library with GPIO support for the Pi is still at version 0.6 and if you add it to 1.0 you get a syntax error in the include file.

- They have released the binaries for all the versions of Julia, but these haven’t made it into the various package management systems yet. So for instance if you do “sudo apt install julia” on a Raspberry Pi, you still get version 0.6.

Hopefully these problems will be sorted out fairly quickly and are just a result of being too close to the bleeding edge.

I was able to get Julia 1.0 going on my Raspberry Pi by downloading the ARM32 files from Julia’s website and then manually copying them over the 0.6 release. Certainly 1.0 works much better than 0.6 (which segmentation faults pretty much every time you have a syntax error). Hopefully they update Raspbian’s apt repository shortly.

Julia for Machine Learning

There is a TensorFlow.jl wrapper to use Google’s TensorFlow. However the Julia group put out a white paper dissing the TensorFlow approach. Essentially TensorFlow is a separate programming language that you use from another programming language like Python. This results in a lot of duplication and forces the programmer to operate in two different paradigms at once. To solve this problem, Julia has the Flux machine learning system built natively in Julia. This is a fairly powerful machine learning system that is really easy to use, reducing the learning curve to getting working models. Hopefully I’ll write a bit more about Flux in a future article.

Summary

Julia 1.0 looks really promising. I think in a month or so all the add-on packages should be updated to the 1.0 level and all the binaries should make it out to the various package distribution repositories. In the meantime, it’s a good time to learn Julia and you can accomplish a lot with the core language.

I was planning to publish a version of my LED flashing light program in Julia, but with the PiGPIO package not updated to 1.0 yet, this will have to wait for a future article.

Playing with my Raspberry Pi

Introduction

I do most of my work (like writing this blog posting) on my MacBook Air laptop. I used to have a good desktop computer for running various longer running processes or playing games. Last year the desktop packed it in (it was getting old anyway), so since then I’ve just been using my laptop. I wondered if I should get another desktop and run Ubuntu on it, since that is good for machine learning, but I wasn’t sure it was worth the price. Meanwhile I was intrigued with everything I see people doing with Raspberry Pis. So I figured why not just get a Raspberry Pi and see if I can do the same things with it as I did with my desktop. Plus I thought it would be fun to learn about the Pi and that it would be a good toy to play with.

Setup

Since I’m new to the Raspberry Pi, I figured the best way to get started was to order one of the starter kits. This way I’d be able to get up and running quicker and get everything I needed in one shot. I had a credit with Amazon, so I ordered one of the Canakits from there. It included the Raspberry Pi 3, a microSD card with Raspbian Linux, a case, a power supply, an electronics breadboard, some LEDs and resistors, heat sinks and an HDMI cable. Then I needed to supply a monitor, a USB keyboard and a USB mouse (which I had lying around).

Setting up was quite easy, though the quick setup instructions were missing a few steps like what to do with the heatsinks (which was obvious) or how to connect the breadboard. Setup was really just install the Raspberry Pi motherboard in the case, add the heat sinks, insert the microSD card and then connect the various cables.

As soon as I powered it on, it displayed an operating system selection and installation menu (with only one choice), so I clicked install and 10 minutes later I was logged in and running Raspbian.

The quick setup guide then recommends you set your locale and change the default password, but they don’t tell you the existing password, which a quick Google reveals as “raspberry”. Then I connected to our Wifi network and I was up and running. I could browse the Internet using Chromium, run Mathematica (a free Raspberry Pi version comes pre-installed) and run a Linux terminal session. All rather painless and fairly straightforward.

I was quite impressed how quickly it went and how powerful a computer I had up and running costing less than $100 (for everything) and how easy the installation and setup process was.

Software

I was extremely pleased with how much software the Raspberry Pi came with pre-installed. This was all on the provided 32Gig card, which with a few extra things installed, I still have 28Gig free. Amazingly compact. Some of the pre-installed software includes:

- Mathematica. Great for math students and to promote Mathematica. It is built on the Wolfram Language, which is interesting in itself.

- Python 2 and 3 (more on the pain of having Python 2 later).

- LibreOffice. A full MS Office like suite of programs.

- Lots of accessories like file manager, calculator, image viewer, etc.

- Chromium web browser.

- Two Java IDEs.

- Sonic Pi music synthesizer.

- Terminal command prompt.

- Minecraft and some Python games.

- Scratch programming environment.

Plus there is an add/remove software program where you can easily add many more open source Pi programs. You can also use the Linux apt-get command to get many other pre-compiled packages.

Generally I would say this is a very complete set of software for any student, hobbyist or even office worker.

Python

I use Python as my main go-to programming language these days and generally I use a number of scientific and machine learning libraries. So I tried installing these. Usually I just use pip3 and away things go (at least on my Mac). However doing this caused pip3 to download the C++/Fortran source code and to try to compile it, which failed. I then Googled around on how to best install these packages.

Unfortunately most of the Google results were how to do this for Python 2, which I didn’t want. It will be so nice when Python 2 finally is discontinued and stops confusing everything. I wanted these for Python 3. Before you start you should update apt-get’s list of available software and upgrade all the packages on your machine. You can do this with:

sudo apt-get update # Fetches the list of available updates

sudo apt-get upgrade # Strictly upgrades the current packages

What I found is that I could get most of what I wanted using apt-get:

sudo apt-get install python3-numpy

sudo apt-get install python3-scipy

sudo apt-get install python3-matplotlib

sudo apt-get install python3-pandas

However I couldn’t find an apt-get package for scikit-learn, the machine learning library. So I tried pip3 and it did work, even though it downloaded the source code and compiled it.

pip3 install --upgrade scikit-learn
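After a mix of apt-get and pip3 installs it is easy to lose track of what actually landed in Python 3. A quick check along the following lines can help. This is a minimal sketch using only the standard library; the helper name missing_modules is my own, and the list of module names simply mirrors the packages installed above (note that scikit-learn is imported as sklearn).

```python
import importlib.util
import sys


def missing_modules(names):
    """Return the module names that this interpreter cannot find."""
    return [name for name in names if importlib.util.find_spec(name) is None]


wanted = ["numpy", "scipy", "matplotlib", "pandas", "sklearn"]

print("Python", sys.version.split()[0])
print("Missing:", missing_modules(wanted) or "none")
```

Run it with python3 so that it reports on the Python 3 installation rather than the Python 2 one.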

Now I had all the scientific programming power of the standard Python libraries. Note that since the Raspberry Pi only has 1Gig of RAM and the SD Card only has twenty something Gig free, you can’t really run large machine learning tasks. However if they do fit within the Pi then it is a very inexpensive way to do these computations. What a lot of people do is build clusters of Raspberry Pis that work together. I’ve seen articles on how University labs have built supercomputers out of hundreds of Pis all put together in a cluster. Further they run quite sophisticated software like Hadoop, Docker and Kubernetes to orchestrate the whole thing.

Summary

I now have the Raspberry Pi up and running and I’m enjoying playing with Mathematica and Sonic Pi. I’m doing a bit of Python programming and browsing the Internet. Quite an amazing little device. I’m also impressed with how much it can do for such a low cost. As other vendors like Apple, Microsoft, HP and Dell try to push people into more and more expensive desktops and laptops, it will be interesting to see how many people revolt and switch to the far more inexpensive DIY type solutions. Note that there are vendors that make things like Raspberry Pi complete desktop computers at quite a low cost as well.

The Road to TensorFlow – Part 9: TensorBoard

Introduction

We’ve spent some time developing a Neural Network model for predicting the stock market. TensorFlow has produced a fairly black box implementation that is trained by historical data and then can output predictions for tomorrow’s prices.

But what confidence do we have that this model is really doing what we want? Last time we discussed some of the meta-parameters that configure the model. How do we know these are vaguely correct? How do we know if the weights we are training are converging? If we want to step through the model, how do we do that?

TensorFlow comes with a tool called TensorBoard which you can use to get some insight into what is happening. You can’t easily just print variables since they are all internal to the TensorFlow engine and only have values when required as a session is running. There is also the problem of how to visualize the variables. The weights matrix is very large and is constantly changing as you train it, so you certainly don’t want to print this out repeatedly, let alone try to read through it.

To use TensorBoard you instrument your program. You tell it what you want to track and assign useful names to those items. This data is then written to log files as your model runs. You then run the TensorBoard program to process these log files and view the results in your Web Browser.

Something Went Wrong

Due to household logistics I moved my TensorFlow work over to my MacBook Air from running in an Ubuntu VM image on our Windows 10 laptop. Installing Python 3, TensorFlow and the various other libraries I’m using was quite simple and straightforward. Just install Python from Python.org and then use pip3 to install any other libraries. That all worked fine. But when I started running the program from last time, I was getting NaN results quite often. I wondered if TensorFlow wasn’t working right on my Mac. Anyway I went to debug the program and that led me to TensorBoard. As it turns out there was quite a bad bug in the program presented last time due to un-initialized variables.

You tend to get complacent programming in Python about un-initialized variables (and array subscript range errors) because usually Python will raise an exception if you try to use a variable that hasn’t been initialized. The problem is NumPy, which is a library written in C for efficiency. When you create a NumPy array, it is returned to Python, telling Python it’s good to go. But since it’s managed by C code you don’t get the usual Python error checking. So when I changed the program to add the volumes to the price changes, I had a bug that left some of the data arrays uninitialized. I suspect on the Windows 10 laptop that these were initialized to zero, but that all depends on which exact C runtime is being used. On the Mac these values were just random memory and that immediately led to program errors.
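One cheap defence against this kind of bug is to scan the input data for values that are not finite before handing it to the model. The following is a sketch of such a check in plain Python, not the code from my program; the function name first_non_finite and the sample data are made up for illustration. For a real NumPy array, np.isfinite(data).all() does the same job in one call. Note that this only catches NaN and infinity, so it won’t notice uninitialized memory that happens to look like an ordinary number.

```python
import math


def first_non_finite(rows):
    """Return (row, column) of the first NaN or infinity, or None if all are finite."""
    for r, row in enumerate(rows):
        for c, value in enumerate(row):
            if not math.isfinite(value):
                return (r, c)
    return None


# Illustrative data only: two small tables of price changes.
clean = [[0.1, -0.2], [0.0, 0.3]]
dirty = [[0.1, -0.2], [float("nan"), 0.3]]

print(first_non_finite(clean))
print(first_non_finite(dirty))
```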

Adding the TensorBoard initialization showed the problem was originating with the data and then it was fairly straightforward to zero in on the problem and fix it.

As a result, for this article, I’m just going to overwrite the Python file from last time with a newer one (tfstocksdiff2.py) which is posted here. This version includes TensorBoard instrumentation and a couple of other improvements that I’ll talk about next time.

TensorBoard

First we’ll start with some of the things that TensorBoard shows you. If you read an overview of TensorFlow it’s a bit confusing about what are Tensors and what flows. If you’ve looked at the program so far, it shows quite a few algebraic matrix equations, but where are the Tensors? What TensorFlow does is break these equations down into nodes where each node is a function execution and the data flows along the edges. This is a fairly common way to evaluate algebraic expressions and not unique to TensorFlow. TensorFlow then supports executing these on GPUs and in distributed environments as well as providing all the node types you need to create Neural Networks. TensorBoard gives you a way to visualize these graphs. The names of the nodes are from the program instrumentation.

When the program was instrumented it grouped things together. Here is an expansion of the trainingmodel box where you can see the operations that make up our model.

This gives us some confidence that we have constructed our TensorFlow graph correctly, but doesn’t show any data.

We can track various statistics of all our TensorFlow variables over time. This graph is showing a track of the means of the various weight and bias matrices.

TensorBoard also lets us look at the distribution of the matrix values over time.

TensorBoard also lets us look at histograms of the data and how those histograms evolve over time.

You can see how the layer 1 weights start as their seeded normal distribution of random numbers and then progress to their new values as training progresses. If you look at all these graphs you can see that the values are still progressing when training stops. This is because TensorBoard instrumentation really slows down processing, so I shortened the training steps while using TensorBoard. I could let it run much longer overnight to ensure that I am providing sufficient training for all the values to settle down.

Program Instrumentation

Rather than include all the code here, check out the Google Drive for the Python source file. But quickly we added a function to get all the statistics on a variable:

def variable_summaries(var, name):

    """Attach a lot of summaries to a Tensor."""

    with tf.name_scope('summaries'):

        mean = tf.reduce_mean(var)

        tf.scalar_summary('mean/' + name, mean)

        with tf.name_scope('stddev'):

            stddev = tf.sqrt(tf.reduce_mean(tf.square(var - mean)))

        tf.scalar_summary('stddev/' + name, stddev)

        tf.scalar_summary('max/' + name, tf.reduce_max(var))

        tf.scalar_summary('min/' + name, tf.reduce_min(var))

        tf.histogram_summary(name, var)
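If it isn’t obvious what these summaries compute, the same four statistics can be worked out in plain Python on a small list. This is only an illustration of the arithmetic, with a made-up function name (summarize) and made-up values; it doesn’t touch TensorFlow at all. The standard deviation is the square root of the mean squared deviation from the mean, matching the expression in the function above.

```python
import math


def summarize(values):
    """Compute the mean, standard deviation, max and min of a list of numbers."""
    mean = sum(values) / len(values)
    # Square root of the mean squared deviation from the mean
    stddev = math.sqrt(sum((v - mean) ** 2 for v in values) / len(values))
    return {"mean": mean, "stddev": stddev, "max": max(values), "min": min(values)}


stats = summarize([1.0, 2.0, 3.0, 4.0])
print(stats)
```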

We define names in the various sections and indicate the data we want to collect:

with tf.name_scope('Layer1'):

    with tf.name_scope('weights'):

        layer1_weights = tf.Variable(tf.truncated_normal(

            [NHistData * num_stocks * 2, num_hidden], stddev=0.1))

        variable_summaries(layer1_weights, 'Layer1' + '/weights')

    with tf.name_scope('biases'):

        layer1_biases = tf.Variable(tf.zeros([num_hidden]))

        variable_summaries(layer1_biases, 'Layer1' + '/biases')

Before the call to initialize_all_variables we need to call:

merged = tf.merge_all_summaries()

test_writer = tf.train.SummaryWriter('/tmp/tf/test',

    session.graph)

And then during training:

summary, _, l, predictions = session.run(

    [merged, optimizer, loss, train_prediction], feed_dict=feed_dict)

test_writer.add_summary(summary, i)

Summary

TensorBoard is quite a good tool to give you insight into what is going on in your model: whether the program is correctly doing what you think and whether there is any sanity to the data. It also lets you tune the various parameters to ensure you are getting the best results.

The Road to TensorFlow – Part 2: Python

Introduction

This is part 2 of my blog series on playing with TensorFlow. Last time I blogged on getting Linux going in a VM. This time we will be talking about the Python programming language. The API for TensorFlow is primarily aimed at Python and in fact much of the research in AI, scientific computing, numerical computing and data research all takes place in Python. There is a C++ API as well, but it seems like a good chance to give Python a try.

Python is an interpreted language that is very rich in supporting various programming paradigms like object oriented, procedural and functional. Python is open source and runs on many platforms. Most Linux distributions and MacOS come with some version of Python pre-installed. Python is very interoperable and can work with most other programming systems, and there are a huge number of libraries of functionality available to the Python programmer. Python is oriented to getting things done quickly with a minimum of code and a minimum of fuss. The name Python is a tribute to the comedy troupe Monty Python and there are many references to Monty Python throughout the documentation.

Installation and Versions

Although I generally like Python, it has one really big problem that is a pain in the ass when setting up new systems and browsing documentation. The newest version of Python as of this writing is 3.5.2, which is the one I wanted to use along with all the attendant libraries. However, if you type python in a terminal window you get 2.7.12. This is because when Python went to version 3 it broke source code compatibility, so the decision was made to maintain version 2 going forwards while everyone updated their programs and scripts to version 3. Version 3.0 was released in 2008 and this mess is still going on eight years later. The latest Python 2.x, namely 2.7.12, was just released in June 2016 and seems to be quite actively developed by a good sized community. So generally to get anything for Python 3.x you need to add a 3 to the end. To run Python 3.5.2 in a terminal window you type python3. Similarly, the IDE is IDLE3 and the package installer is pip3. It is very easy to make a mistake and get the wrong thing. Worse, the naming isn’t entirely consistent across all packages; there are several that I’ve run into where you add a 2 for the 2.x version and the version 3 one is just the name. As a result, I always get a certain amount of Python 2.x stuff installed by mistake (which doesn’t hurt anything, it just wastes time and disk space). This also leads to a bit of confusion when you Google for information, in that you have to be careful to get 3.x info rather than 2.x info, as the wrong one may or may not work and may or may not be a best practice.
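One way to avoid running a script under the wrong interpreter by accident is to have the script check for itself. The following is a small sketch of that idea; the function name require_python3 is my own, and the code is deliberately written so that it also parses under Python 2 and can therefore report the problem there.

```python
import sys


def require_python3():
    """Exit early with a clear message when run under Python 2."""
    if sys.version_info[0] < 3:
        sys.exit("This script needs Python 3; try running it with python3.")


require_python3()
print("Running Python %d.%d.%d" % sys.version_info[:3])
```

Putting the check at the top of a script turns a confusing failure further down into an immediate and obvious message.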

On Ubuntu Linux I just used apt-get to install the various packages I needed. I’ll talk about these a bit more in the next posting. Another option for installing Python and all the scientific libraries is to use the Anaconda distribution, which is quite a good way to get everything in Python installed all at once. I used Anaconda to install Python on Windows 10 and it worked really well; you just don’t get fine control over what it does, and it creates a separate installation to keep everything apart from anything already installed.

Python the Language

Python is a very large language; it has everything from object orientation to functional programming to huge built in libraries. It does have a number of quirks though. For instance, the way you define blocks is via indentation rather than using curly brackets or perhaps end block statements. So indentation isn’t just a style guideline, it’s fundamental to how the program works. In the following bit of code:

for i in range(10):

    a = i * 8

    print( i, a )

a = 8

the two indented statements are part of the for loop and the out-dented assignment is outside the loop. You don’t define variables, they are defined when first assigned to, and you can’t use a variable without assigning it first (or an exception will be thrown). There are a lot of built in types including dictionaries and lists, but no array type (but the numpy library does add these). Notice how the for loop uses in rather than to, to do a basic loop.
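A short sketch of the other points in that paragraph, with names made up purely for illustration: reading a name that was never assigned raises a NameError, while lists and dictionaries are available without importing anything.

```python
def use_before_assignment():
    """Reading a name that was never assigned raises NameError."""
    try:
        return undefined_total + 1  # undefined_total is never assigned anywhere
    except NameError as err:
        return "NameError: %s" % err


print(use_before_assignment())

# Lists and dictionaries are built-in types, no import needed
multiples = [i * 8 for i in range(5)]
lookup = {i: i * 8 for i in range(5)}

print(multiples)
print(lookup[3])
```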

I don’t want to get too much into the language since it is quite large. If you are interested there are many good sites on the web to teach Python and the O’Reilly book “Learning Python” is recommended (but quite long).

Since Python is interpreted, you don’t need to wait for any compile steps so the coding, testing, debugging cycle is quite quick. Writing tight loops in Python will be slower than C, but generally Python gives you quite good libraries to do most of what you want and the libraries tend to be written in C or Fortran and are very fast. So far I haven’t found speed to be an issue. TensorFlow is also written in C++ for speed, plus it has the ability to run on NVidia graphics cards for an extra boost.

Summary

This was my quick intro to Python. I’ll talk more about relevant parts of Python as I go along in this series. I generally like Python and so far my only big complaint is the confusion between the version 2 world and the version 3 world.

The Road to TensorFlow – Part 1 Linux

Introduction

There have been some remarkable advancements in Artificial Intelligence type algorithms lately. I blogged on this a little while ago here. Whether it’s computers reading hand-writing, understanding speech, driving cars or winning at games like Go, there seems to be a continual flood of stories of new amazing accomplishments. I thought I’d spend a bit of time getting to know how this was all coming about by doing a bit of reading and playing with the various technologies.

I wanted to play with Neural Network technology, so thought the Google TensorFlow open source toolkit would be a good place to start. This led me down the road to quite a few new (to me) technologies. So I thought I’d write a few blog posts on my road to getting some working TensorFlow programs. This might take quite a few articles covering Linux, Python, Python libraries like Pandas, Stock Market technical analysis, and then TensorFlow.

Linux

The first obstacle I ran into was that TensorFlow had no install image for Windows. After a bit of Googling, I found you need to run it on MacOS or Linux. I haven’t played with Linux in a few years and I’d been meaning to give it a try.

I happened to have just read about a web site osboxes.org that provides VirtualBox and VMWare images of all sorts of versions of Linux all ready to go. So I thought I’d give this a try. I downloaded and installed VirtualBox and downloaded a copy of 64Bit Ubuntu Linux. Since I didn’t choose anything special I got Canonical’s Unity Desktop. Since I was trying new things, I figured oh well, let’s get going.

Things went pretty well at first, I figured out how to install things on Ubuntu which uses APT (Advanced Packaging Tool) which is a command line utility to install things into Ubuntu Linux. This worked pretty well and the only problems I had were particular to installing Python which I’ll talk about when I get to Python. I got TensorFlow installed and was able to complete the tutorial, I got the IDLE3 IDE for Python going and all seemed good and I felt I was making good progress.

Then Ubuntu installed an Ubuntu update for me (which like Windows is run automatically by default). This updated many packages on my virtual image and in the process broke the Unity desktop. Now the desktop wouldn’t come up and all I could do was run a single terminal window. So at least I could get my work off the machine. I Googled the problem and many people had it, but none of the solutions worked for me and I couldn’t resolve the problem. I don’t know if it’s just that Unity is finicky and buggy or if it’s a problem with running in a VirtualBox VM. Perhaps something with video drivers, who knows.

Anyway I figured to heck with Ubuntu and switched to Red Hat’s Fedora Linux. I chose a standard simple Gnome desktop and swore to never touch Unity again. I also realized that now I’m retired, I’m not a commercial user, so I can freely use VMWare, so I also switched to VMWare since I wondered if my previous problem was caused by VirtualBox. Anyway installing TensorFlow on Fedora seemed to be quite difficult. The dependencies in the TensorFlow install assume the packages that Ubuntu installs by default and apparently these are quite different from Fedora’s. So after madly installing things that I didn’t really think were necessary (like the Gnu Fortran compiler), I gave up on Fedora.

So I went back to osboxes.org and downloaded an Ubuntu image with the Gnome desktop. This then has been working great. I got everything re-installed quite quickly and was back to being productive. I like Gnome much better than Unity and I haven’t had any problems. Similarly, I think VMWare works a bit better than VirtualBox and I think I get a bit better performance in this configuration.

I have Python along with all the Python scientific and numerical computing libraries working. I have TensorFlow working. I spend most of my time in Terminal windows and the IDLE3 IDE, but occasionally use FireFox and some of the other programs pre-installed with the distribution.

I’m greatly enjoying working with Linux again, and I’m considering replacing my currently broken desktop computer with something inexpensive natively running Linux. I haven’t really enjoyed the direction Windows has taken after Windows 7 and I’m thinking of perhaps doing most of my computing on Linux and MacOS.

Summary

I am enjoying using Linux again, in spite of my initial problems with Ubuntu’s Unity Desktop and then with Fedora (running TensorFlow). Now that I have a good system that seems to be stable and working well, I’m pretty happy with it. I’m also glad to be free of things like App stores and it’s nice to feel in control of my environment when running Linux. Anyway this was the small first step to TensorFlow.